EyeSkills Feature demonstration / user test with Cliff W – 02.09.2018

This was intended as a quick test of new features, but it generated some very interesting ideas and insights. Here, we describe the order of the scenes tested and the insights gained, and finally draw conclusions. Cliff has alternating strabismus.

| Please be aware, this is an informal report of a single (unusual) user test. I am deliberately not using scientific language. We are still gathering experience with the help of our participants to design the right set of tools and the right experimental design to enable the delivery of a rigorous scientific assessment of effectiveness. |

Could see with both eyes independently at the default size/luminance

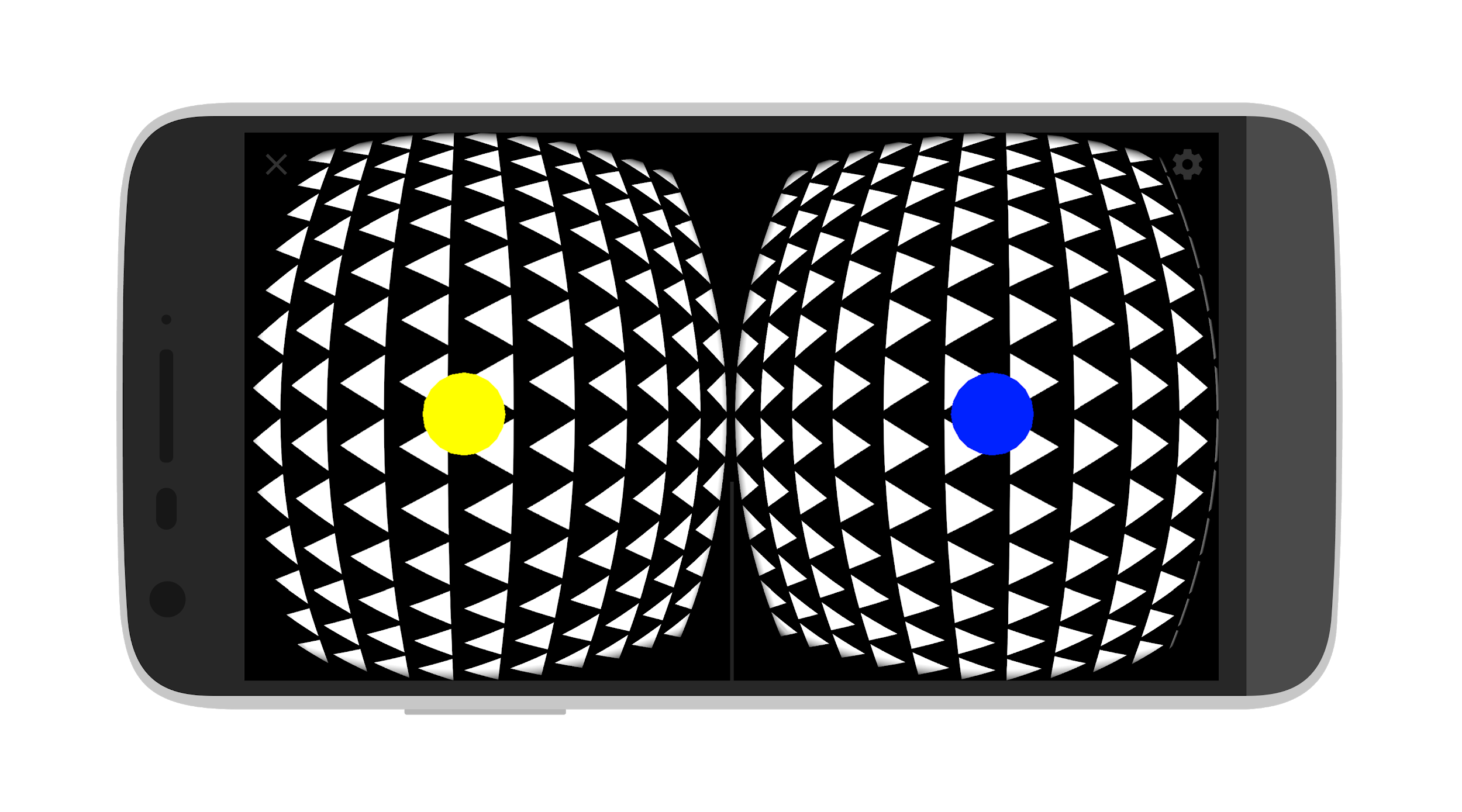

Binocular suppression test with conflict

When testing without conflict, Cliff could see the arrows pointing left at the top of the perceived screen, and arrows pointing right at the bottom.

For the first time, with Cliff, we activated the conflict mode.

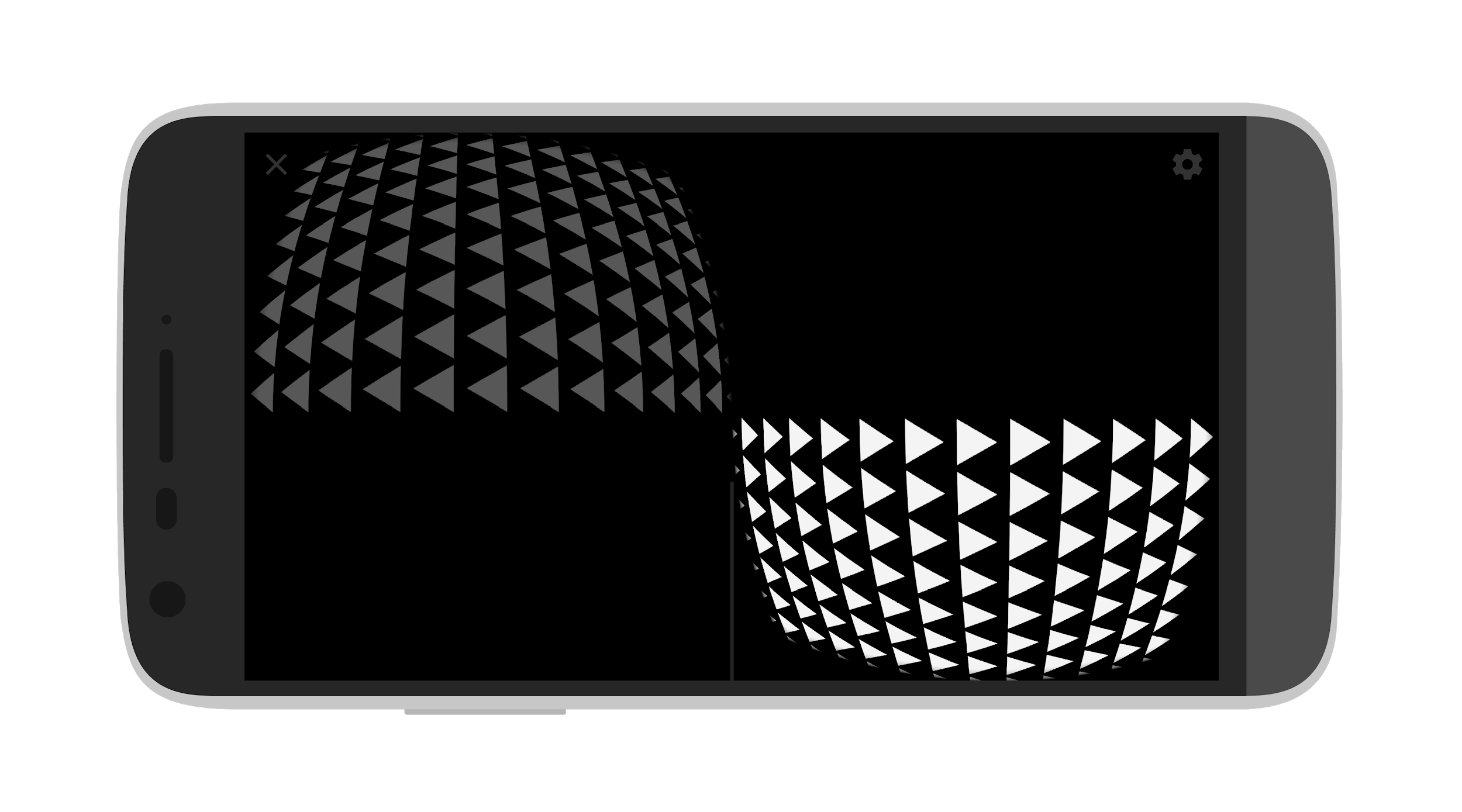

Unlike others who experience the complete suppression of an eye, Cliff reported seeing a row of arrows pointing left at the leftmost extreme, followed by three rows pointing right, followed by a central section with arrows pointing in either direction (but not overlapping), then three rows pointing left, and the outermost row pointing right. I have attempted to draw what he perceived below :


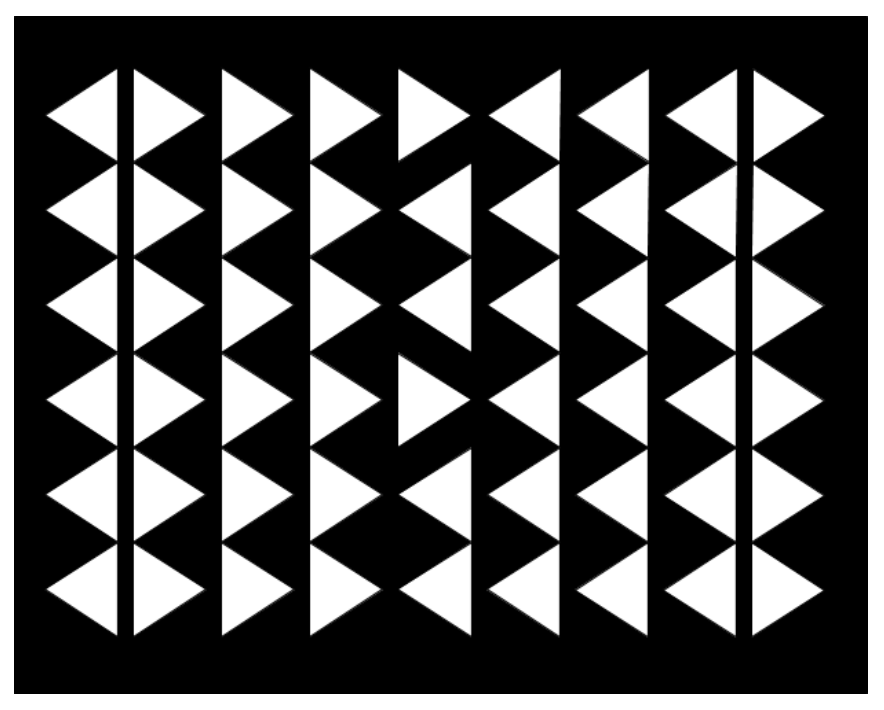

Even when altering the suppression ratio, Cliff never sees any overlapping stars. Very unexpected. We are not able to forcibly break suppression. He is also suppressing non-overlapping regions of each eye.

Eye misalignment

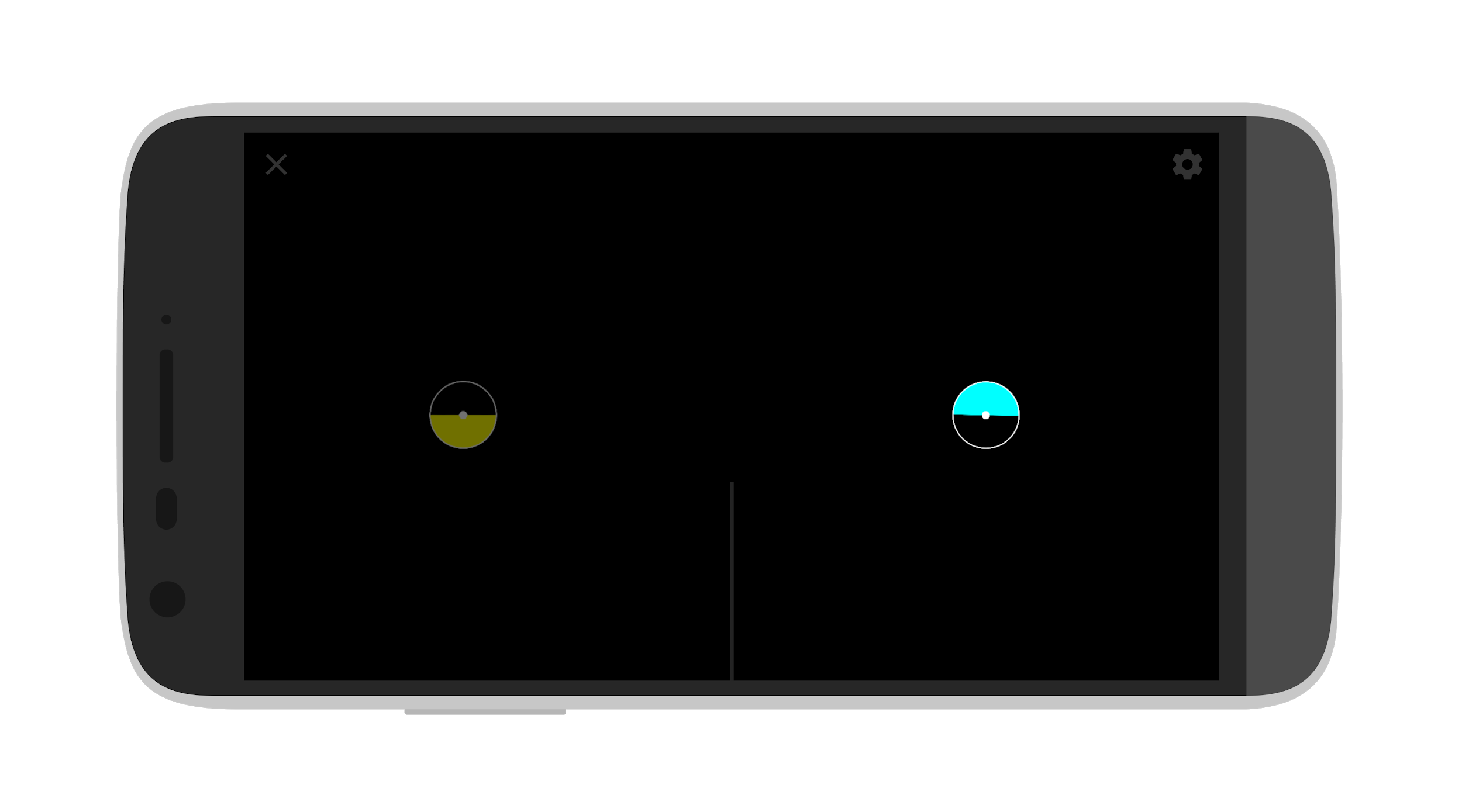

Fixing with his left eye, he is able to see the yellow filled circle. With his right eye, he is aware that something is present, but he cannot identify it (however, this may be at a “logical” level).

Improvement: We need to be able to manually override the luminance ratio in any scene (i.e. add a new micro-controller).

Binocular suppression (second attempt)

We realised through the eye misalignment test that Cliff hadn’t been wilfully fixing with one eye during the first binocular suppression test. We repeated it, but this time Cliff fixed strongly with his left eye. My goal was to set up a suppression ratio which would be suitable for him in a repeated eye misalignment measurement wherein he would be fixing with the left eye (as I didn’t have the ability to override this setting manually).

In this case, with conflict on, Cliff was only seeing arrows pointing left. As he altered the luminance and dropped the brightness of the leftward arrows, his fixing eye would suddenly and autonomously jump to the right eye, where he would only see right facing arrows.

With a great deal of willpower, he could continue to see the left facing arrows until it became very difficult (they became very dark) but at no point did he see overlapping arrows. We did not break suppression, even with one eye fixed.

This was an unpleasant surprise for me. Although Cliff seems to have a different set of abilities from our other test participants, I had nevertheless assumed that our “brute force” approach to image suppression would still work. My hypothesis at this point is that, for him, suppression seems to act at a higher (more abstract) level of processing than for most. He often reports being aware of objects which might be there, but he “cannot bring himself to care” (i.e. to recognise/identify).

Depth Perception

To verify that Cliff was unable to fuse I ran the depth perception test, where he has to pick the “closest” of the four circles. We randomly bring one “closer” (on the basis of vergence, in which we maintain identical object dimensions) than the others, by decreasing amounts with each attempt.

He reported only seeing four circles, which was already suspicious given that we hadn’t succeeded in setting the eye-misalignment. For this reason I had added “binocular cues” in advance above and below the diamond. Unfortunately, he was able to see both simultaneously. I suspected his “partial” suppression was active again. Perhaps he was seeing some circles (and the binocular cues) with one eye, and others with the other.

At any rate, it is almost impossible to judge which circle is closest without using both eyes on a circle simultaneously.

His results were (T: true, F: false): F,F,F,T,F… followed by a string of false answers, at which point he gave up. This seemed to support the hypothesis that he was suppressing.

Improvement: We couldn’t check whether this suppression was “partial” or “whole eye” unfortunately. We need to enhance the circles to include binocular cues (e.g. a cyan spot at the top for the right eye, and a yellow spot at the bottom for the left eye) so we can ask for more details about what it is they are perceiving.

Alternating Fusion

The alternating fusion test was created specifically for Cliff on the basis of our previous experiences with him. In our very first session, I spent some time with him trying to get him to alternate his fixing eye as quickly as possible. This was only one of many exploratory practices, which may or may not have had a significant impact. Nevertheless, at the time it had a profound physical effect on him (from hairs standing up on end, his skin flushing, and a feeling of extreme exhaustion afterwards) and his eyes (his alternation became so fast that his eyes effectively maintained their straightness for a period of tens of seconds, while he was simultaneously able to verge with both eyes upon an object we moved closer/further away from him). The next day he found he was able to will himself to look straight, and was suddenly able to perceive depth.

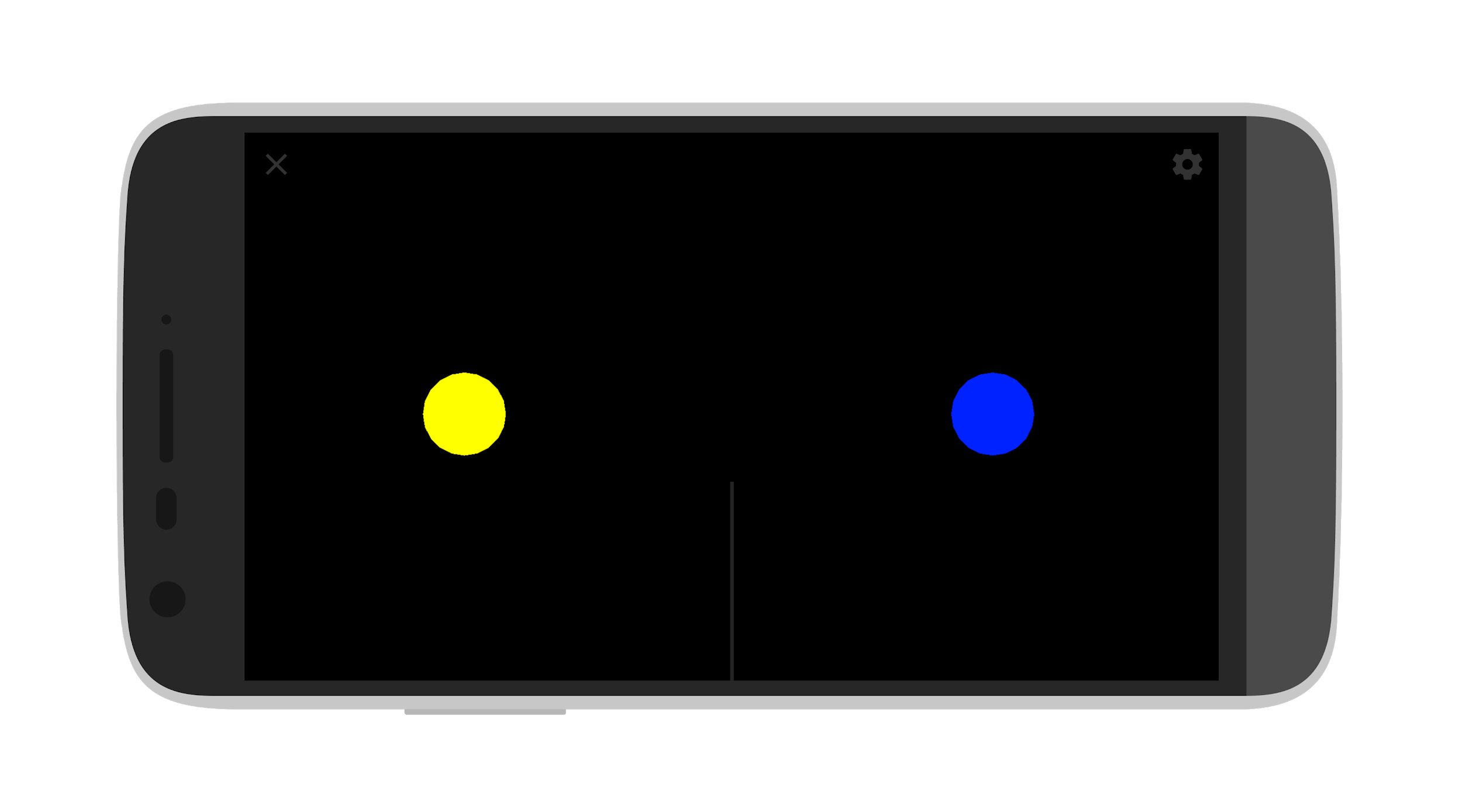

When we started the alternating fusion test, I did so without activating conflict:

Cliff was able to see both yellow and blue discs. Unfortunately, in my hurried notes (this experiment was done in the late evening just before a business trip to Korea, when I ought to have been putting my children to bed!) I do not have a record of whether he perceived the two dots at the same position.

With conflict activated :

… there are two possible ways Cliff could perceive the scene. When attempting to relax and look centrally (without willing any fixation) he could see an impossible colour at the center – which implies that both eyes were straightening up.

As soon as he attempts to fix one eye or the other, he will see only that colour, and the location of the disc will jump around. We cannot say more about this until we have developed inward facing cameras within the headset to precisely relate eye movement to the scene.

At 4 Hz, I noticed that Cliff was moving his head from side to side. He reported that it was an effort, and quite crazy. At 9 Hz, if he fixed with his left eye, he would see mostly yellow with flashes of blue (but at the same position, as if the disc was changing colour). This implies straightened eyes. We pushed on to 15 Hz, but I’m afraid my notes are too scant to reconstruct what happened (I am only able to write this up over a week after the test).

Improvements: The blue disc needs to be cyan for consistency.

| By this point I felt exhilarated (with my science hat on) that we appeared to be identifying the phenomena that Cliff experiences more clearly, but also somewhat defeated (with my engineering hat on) that the features we had built seemed inadequate to get the effects that we had anticipated. At this point, some very unexpected and very exciting things suddenly started happening. Again, I am not sure if this has to do with the alternating fusion scene, but it seems to hint that we need to explore that connection more rigorously. |

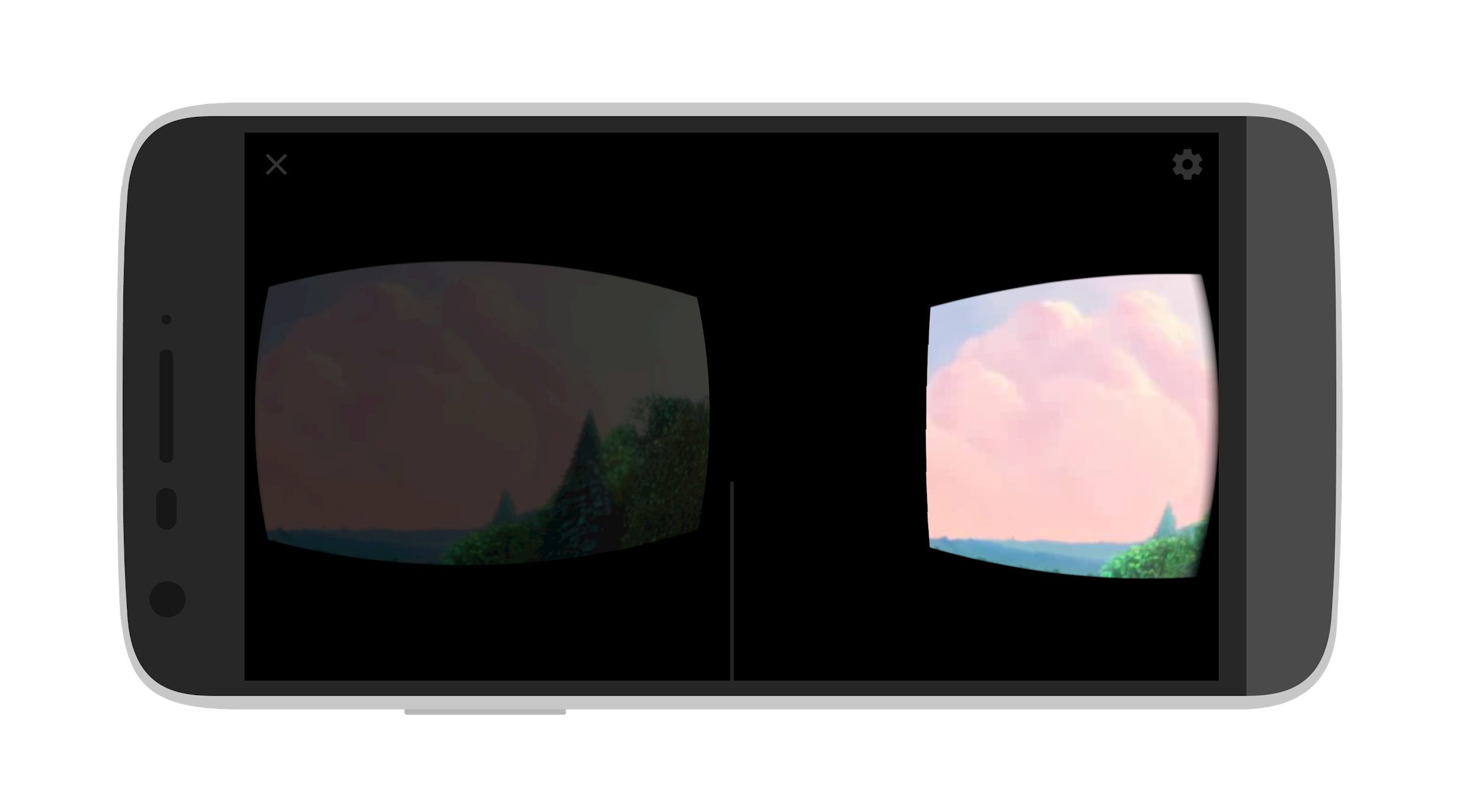

Video Eye Straightening – First Attempt

At this point we still didn’t have a working “misalignment angle”, which made it seem a little pointless to attempt eye straightening. I still wanted to show Cliff the two new experiences we’d built (video and “hyper reality”), so we proceeded anyway, just to demonstrate to him the direction we are moving in (without proceeding first to the standard eye-realignment scene).

The goal of the video eye straightening scene is to allow the participant to relax whilst watching a video positioned and manipulated so that they can fuse it. As they watch, the scene gradually rotates, and adjusts the image processing of, the part of the video seen by the misaligned eye, such that their visual system gradually straightens the eye to maintain fusion – at least, that was our theory. I didn’t think we’d get a chance to try it out here, but I was wrong – as you will see later.
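As a minimal sketch of the "gradual" part of this idea: the offset applied to the misaligned eye's video could be ramped from the measured misalignment angle down to zero over some period. The linear ramp, the function name `straightening_offset`, and the parameters below are all assumptions for illustration; the report does not describe how the actual scene schedules the rotation.

```python
def straightening_offset(measured_angle_deg: float,
                         elapsed_s: float,
                         duration_s: float) -> float:
    """Return the angular offset (degrees) still applied to the misaligned eye's video.

    Hypothetical linear ramp: full measured angle at t=0, zero (straight) at t=duration_s.
    """
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    # Clamp progress to [0, 1] so the offset never goes past "straight".
    progress = min(max(elapsed_s / duration_s, 0.0), 1.0)
    return measured_angle_deg * (1.0 - progress)


# Example: a 12 degree offset removed over 60 seconds.
for t in (0, 30, 60, 90):
    print(t, straightening_offset(12.0, t, 60.0))
```

A slower or non-linear ramp might be gentler on the participant; that is something only testing could tell us.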

| Unfortunately, I am writing this up in a bunch of different coffee shops across Seoul as I move about and find five minutes here and there. I can’t generate the correct screenshots right now, so the screenshots you are seeing are taken from unrelated experiments just to give some impression of what we’re talking about. |

We have a mode which allows “blinkers” to be added to the scene. These are horizontal black strips occluding the video image. The left eye sees only the bottom half of the video, and the right sees the upper half. This avoids the brain receiving a conflict signal from either eye. Lo and behold, with the blinkers on, and with a little concentration, Cliff could see the entire video as one.

It was interesting to hear his feedback that:

| “Breaking suppression feels wrong!” |

This is not surprising, given that the brain associates the use of both eyes with seeing the wrong thing and potential danger.

As we continued, however, we noticed some interesting and unexpected effects. First, we removed the blinkers, which introduces suppression, as both eyes are now placed in conflict.

When Cliff chose to consciously fix with the left eye, at first his right eye would fall inwards towards the nose to its usual (esotropic) position. Strangely, however, not only was his right eye not completely suppressed (he was seeing a partially suppressed mess), his right eye started to pull towards a straightened central position, resulting in (we believe) a fused image.

He described this in these terms:

| “It still feels wrong, but now I can see that it’s awesome! It’s proving to me that it is not wrong, that it’s right for the eyes to go straight” |

I hadn’t expected this at all. Clearly, something had changed: he was accessing abilities he couldn’t access just minutes before. On a hunch, we re-ran the eye misalignment scene.

Improvement : we must be able to more accurately tell whether or not the perceived image is truly fused, or just monocular. I think an additional generic mode is required, to place colour filters over each virtual eye – that is, to render only red components of the image in one eye, and blue/green in the other eye. This means that, without fusion, the video will either look red or cyan. Only with full fusion will the participant report seeing the full colour range.
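The colour filter idea can be sketched per pixel as follows. This is only an illustration in plain Python, not how the headset renders; the function name `split_for_eyes` and the choice of which eye receives the red channel are assumptions.

```python
def split_for_eyes(pixel):
    """Split one (r, g, b) pixel into the two filtered versions.

    Assumption: the left virtual eye gets only the red component,
    the right virtual eye gets only green and blue (cyan).
    """
    r, g, b = pixel
    left_eye = (r, 0, 0)    # looks red when seen alone
    right_eye = (0, g, b)   # looks cyan when seen alone
    return left_eye, right_eye


def filter_frame(frame):
    """Apply the split to a frame given as a list of rows of (r, g, b) tuples."""
    left = [[split_for_eyes(p)[0] for p in row] for row in frame]
    right = [[split_for_eyes(p)[1] for p in row] for row in frame]
    return left, right


left, right = split_for_eyes((200, 120, 40))
print(left, right)  # (200, 0, 0) (0, 120, 40)
```

Adding the two filtered pixels channel by channel gives back the original colour, which is why only a participant who is combining both eyes' images should report the full colour range.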

Eye misalignment – second attempt

We had now settled on Cliff attempting to keep fixation with his left eye to introduce consistency (the difficulty with alternating strabismus being that the fixing/lazy eye alternate unpredictably otherwise).

Something profound had changed, as Cliff immediately saw both misalignment indicators separately. He then moved his head to bring them into the same position, and immediately reported seeing shimmering colours. These are the “impossible colours” caused when each eye sees the same shape having a different colour – and are a sure sign of fusion occurring. The measured misalignment angle was now indicating esotropia in his right eye. This was more than just interesting.

We quickly jumped back into the video realignment scene, but this time preserving the newly measured eye misalignment angle.

Video realignment – second attempt

This time, Cliff could see the video in its entirety absolutely perfectly from the very beginning. Clearly, the measured eye-misalignment was now placing the video precisely in the center of each eye and he was able to fuse them.

At this point, I activated the automatic straightening. From the practitioner console, I could see the video shown to the lazy eye straightening, and asked him if anything was changing. Cliff reported no change. He was happily watching the video. I kept repeating the question until the console showed that the two images in the headset were perfectly straightened. At no point had Cliff noticed the alteration in alignment, or perceived any effort on his part in straightening his eyes.

To test that his eyes were indeed straight, I then (without warning) reset the playing video to its original misaligned position. The response from Cliff was perfectly logical.

He almost jumped out of his seat and said “Wow. Something changed! What changed! It’s all the same, I can still see everything, but something suddenly changed!”. By resetting the video position, his straightened eyes were then (likely) perceiving different images, causing conflict and the immediate falling of the right eye to the esotropic position – where the video was waiting, correctly centered for a misaligned eye. This happened so fast that Cliff was aware of a disruption, but in effect, the video went from being centered in a straightened eye, to being centered in a misaligned eye, so quickly that he couldn’t perceive the transition.

I repeated this entire cycle three times, with the same effect each time.

Improvements : We need the ability to activate audio cues when the scene seen by both eyes has completely straightened. At this point, it would be very interesting to have the participant repeatedly close and open both eyes, to see if they are able to immediately regain fusion (without the eye falling to one side). This would open the door, between blinks, to remove the headset and see if they can maintain access to fusion when looking at the real world without any intervening medium.

Hyper reality realignment

I decided to then show Cliff the experimental hyper-reality setup I’d built. This streams the world, as-is, from the phone’s front-facing camera. It then manipulates it to take into account eye-misalignment.

Without the blinkers, Cliff could immediately fuse, and the eye straightening worked as effectively as in the video – although Cliff was simultaneously interacting with the world around himself.

One unexpected event occurred when I activated the blinkers, however, and this is particularly fascinating. With the blinkers on, the scene ought to remove conflict and allow him to create a single image even more easily (as occurred in the video realignment experiment, or the binocular suppression scene earlier). Something was different here, perhaps because of the relation of what he was seeing to physical reality itself. With the blinkers on, Cliff reported seeing a panoramic field. This means that he perceived the image the left eye was seeing as being located truly to the left of the image the right eye was perceiving. This may well suggest that, over the years, Cliff’s brain has also learnt to work with the input from both eyes, taking into account that they are looking in different directions. This kind of effect has been anecdotally written about – but this opens up a chance to do more precise experiments.

Depth perception – second attempt

By this point I had goosebumps. Something had shifted in Cliff’s perception and the eye-realignment was working exactly as I had hoped. There was one more thing I felt we needed to try. The depth perception test had been a dismal “failure” in the sense that Cliff hadn’t been able to fuse and therefore perceive depth. What would happen if we attempted it again, using the new misalignment angle and after the kind of stimulation his mind had received in the intervening time?

We re-ran the test and his results were:

T,T,T,T,F,T,T,T,F,T: an 80% success rate. That is no fluke. The last half of the test has such subtle distance differences that even perfectly sighted people struggle.
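To put a rough number on "no fluke", here is a small sketch that counts the correct answers and asks how likely a score at least this good would be from pure guessing. It assumes each attempt is an independent one-in-four guess (four circles, one correct), which is a simplification: it ignores any monocular cues and the fact that later attempts are harder. The function name `chance_of_at_least` is made up for this example.

```python
from math import comb


def chance_of_at_least(successes: int, trials: int, p_guess: float) -> float:
    """Binomial tail: probability of getting `successes` or more right by guessing."""
    return sum(
        comb(trials, k) * p_guess**k * (1 - p_guess) ** (trials - k)
        for k in range(successes, trials + 1)
    )


answers = "T,T,T,T,F,T,T,T,F,T".split(",")
correct = answers.count("T")          # 8
rate = correct / len(answers)         # 0.8
p_by_chance = chance_of_at_least(correct, len(answers), 0.25)

print(f"{correct}/{len(answers)} correct ({rate:.0%})")
print(f"chance of this or better by guessing: {p_by_chance:.6f}")
```

Under those assumptions the chance of eight or more correct out of ten is roughly 0.0004, i.e. about 4 in 10,000. This is a single session with a single participant, so it is an indication rather than a measurement.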

Cliff was not only fusing, he was suddenly able to intuitively perceive distance. Incredible.

Improvements : I think we need to extend these tests to ask the participant to sort all four in order of depth. This will give us a less binary insight into their depth perception abilities.
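One possible way to score such an ordering task, sketched here as an assumption rather than a design decision, is to count how many pairs of circles the participant placed in the correct relative order. The function name `ordering_score` and the circle labels are hypothetical.

```python
from itertools import combinations


def ordering_score(true_order, reported_order):
    """Fraction of circle pairs whose relative depth order was reported correctly.

    Both arguments list the same circle labels from nearest to farthest.
    1.0 means a perfect ordering, 0.0 means exactly reversed.
    """
    position = {circle: i for i, circle in enumerate(reported_order)}
    pairs = list(combinations(true_order, 2))  # (nearer, farther) in the true order
    agree = sum(1 for nearer, farther in pairs if position[nearer] < position[farther])
    return agree / len(pairs)


print(ordering_score("ABCD", "ABCD"))  # 1.0
print(ordering_score("ABCD", "DCBA"))  # 0.0
print(ordering_score("ABCD", "BACD"))  # 5 of 6 pairs correct
```

With four circles there are six pairs, so each attempt yields a score in steps of one sixth instead of a single true/false.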

Conclusions

I think there is plenty of evidence here to suggest we are on the right track to re-animating binocular vision in some subset of cases. This once again shows the importance of testing often and testing early – it’s now clear what the next set of functionality needs to be.

Improvements : Perhaps the most important next step we need to take is to take reliable, time synchronised, footage of the scenes they are looking at, the precise position of their eyes, and their audio commentary. This implies that, at a minimum, we must find a way to embed an inward facing camera which can record the position of the eyes.

Ideas:

We are still at the stage where we need to falsify the hypothesis that none of this actually works. I am seeing its effectiveness, but this is not enough. We need to discover more about when/for whom it works, and to what extent. We need quantifiable numbers so that we can measure improvements, or catch regressions, in performance on the basis of re-designs.

We need to design a process which objectively results in measurable biocular/binocular/depth-perception/straightening – or fails to do so. If this process can also uncover an optimal ordering with which to approach the problem, or a set of tools to help stimulate the visual system into better performance, that is all the better.

It therefore seems that we need a testing “worst case scene” (WCS) process which exposes the lack of binocular vision, and eye misalignment. This will probably involve a chaotic background scene with a grid of randomised partial images (split across both eyes) in three horizontal rows (to cause maximum potential overlap/conflict).

It seems that we must also answer the question of whether, when the eyes are not in conflict, they are also misaligned. Can we measure misalignment FIRST before doing suppression testing? If we have horizontal blinkers activated, can we get them to align top and bottom parts in a central position? Can we compare that misalignment angle with one generated from a scene where there is conflict?

We would then be able to approach the WCS in three stages: first from the perspective of non-conflict with eye-misalignment already factored in, then from non-conflict with both eye-misalignment and binocular suppression ratios factored in, and finally with conflict and all compensations activated.

Once these steps are exhausted, we could then cycle between Depth-Perception and a stimulation step.