Today’s user test was absolutely fascinating. During our initial tests we established that Mr C has a less common form of strabismus than most – alternating strabismus. This is a situation we consider “beyond our initial design specification” but we continued and were rewarded with shocking surprises and inspiring insights!

Background Information

Mr C

Age now : 20s

Diagnosed with strabismus : around 2 years old

Wearing glasses since : around 2 years old

Therapies : Patching between four and five years old

Surgery : Corrective eye surgery at ages ten and sixteen.

Eye strength : hyperopic (long-sighted), around 1 dioptre.

Intermittent strabismus? : Permanent strabismus

Alternating strabismus? : Yes

Physical tests

Please see this post for more information.

Commentary

Mr C has accepted his strabismus as an immutable fact of life, although we discovered several signs that this may not be so. Here we discuss some of the most interesting test results and insights.

Fixation test

During the very first test, asking Mr C to focus on my nose so I could see which eye was strabismic, he mentioned that he could choose that eye. In a totally relaxed state it tends to be his left eye which turns in (this is called esotropic), but with a will, he can fix his gaze on the object (my nose) with either eye, causing the opposite eye to immediately turn in. Will power is an incredibly important factor in strabismus, as this is the basic mechanism available to us to overcome all manner of mental resistance and self-suppression.

In the case of alternating amblyopia, the brain is suppressing one or the other eye. It is normally reported that there is no sensation of this happening; however, Mr C mentioned that he has a sense of which eye is currently active. This is already interesting.

Alternating strabismus is also a great example of why simplistic models explaining strabismus as a muscular problem are wrong. Either eye can position itself centrally. Our motility tests showed that both eyes have a full range of movement. The muscle itself is not hindered in any way. The issue is neurological – in how the eye is being controlled by the brain.

Motility test

In the motility test we asked Mr C to follow an object as it was moved in various patterns to check the range of movement of his eyes. This was conducted with both eyes simultaneously and glasses on. Whilst his eyes could follow the object, the tracking of the object was jerky. At the extremes of movement the lazy eye (which would be initially trailing the good eye) would catch up with the good eye and position itself at the extreme of the eye socket.

In one fascinating moment, however, Mr C’s eyes suddenly started to flicker wildly as the fixation object was held centrally, around 20cm from his face. He mentioned that this was “mean” and we asked what he meant by this. He replied that it was tiring. This is an effect which has been mentioned elsewhere (for instance in “Fixing my Gaze” by Susan R. Barry) of how the world can appear to “shake”.

We later realised that Mr C tends to fixate with the left eye when the object is on the left side of his face, and with the right eye when it is on the right side. We hypothesise that, after these wide ranging test movements (crosses, diagonals, and circles around the face), the positioning of the fixation object statically in the center of his vision caused his brain confusion over where it would then move, and which eye ought to fixate. The brain then rapidly alternated between trying to fix with the left and right eye, causing the “shaking” which Mr C reported as quite stressful.

Brock String and Binocular Suppression test (app)

The Brock string test brought more surprises. A Brock string is a very simple tool consisting of a long string with three differently coloured beads on it.

We quickly established that only one eye was active at any given moment (Mr C could only see one string going into and out of the central bead while he fixated upon it) but, again, he could choose which eye to use to fix with.

In discussing this, however, our naive view of suppression was blown to smithereens. Mr C couldn’t understand at first why he ought to see more than one string at a time. It was explained that, with both eyes active simultaneously but focused on the central bead (even if the lazy eye were failing to focus on it), the string would be seen from angles too different for the brain to fuse, so it would appear as two strings. As such, one eye is “switched off”. He thought about that for a moment, and (to paraphrase) said “no it’s not. It’s not that simple.”

What Mr C went on to demonstrate, by covering the currently lazy eye with his own hand, was that his total field of vision was reduced when the lazy eye was covered. After several attempts to understand this, we have come to the conclusion that his brain is suppressing all overlapping portions of the image (anything seen by both eyes at the same time) in favour of the good eye, but is not suppressing any signal from the lazy eye which is not overlapping (i.e. seeing different parts of the world because it is located on the other side of the face).

We were not aware of (and had not come across) the idea that suppression is non-binary in the case of esotropia. There is some anecdotal evidence that, in the case of exotropia (the lazy eye falling outwards rather than inwards), the brain can produce something called “panorama vision” (an extended field of view perhaps similar in nature to that of herbivores with eyes mounted on the sides of their heads), but this shook us deeply.

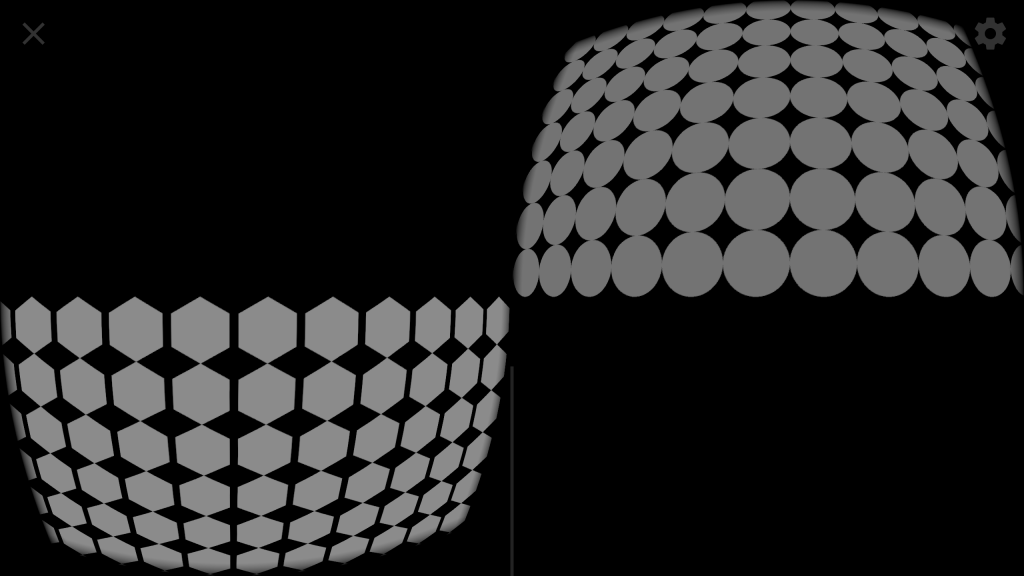

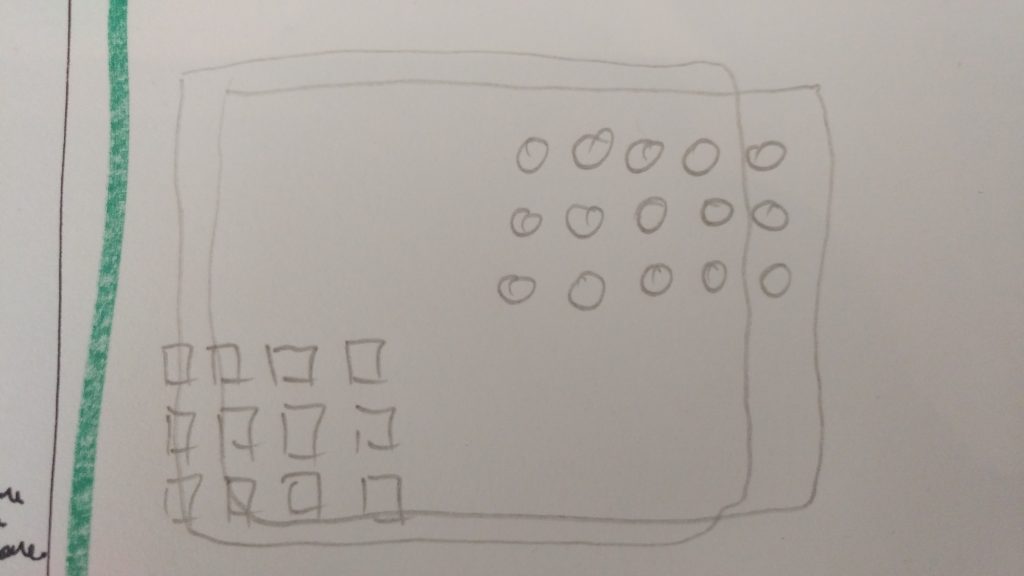

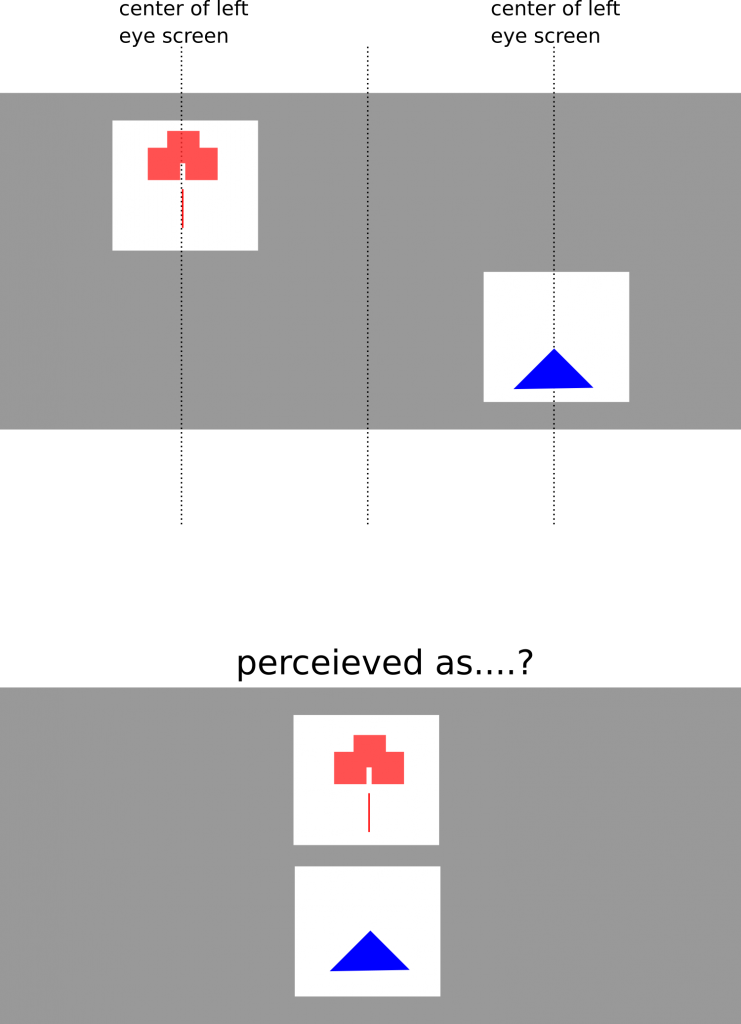

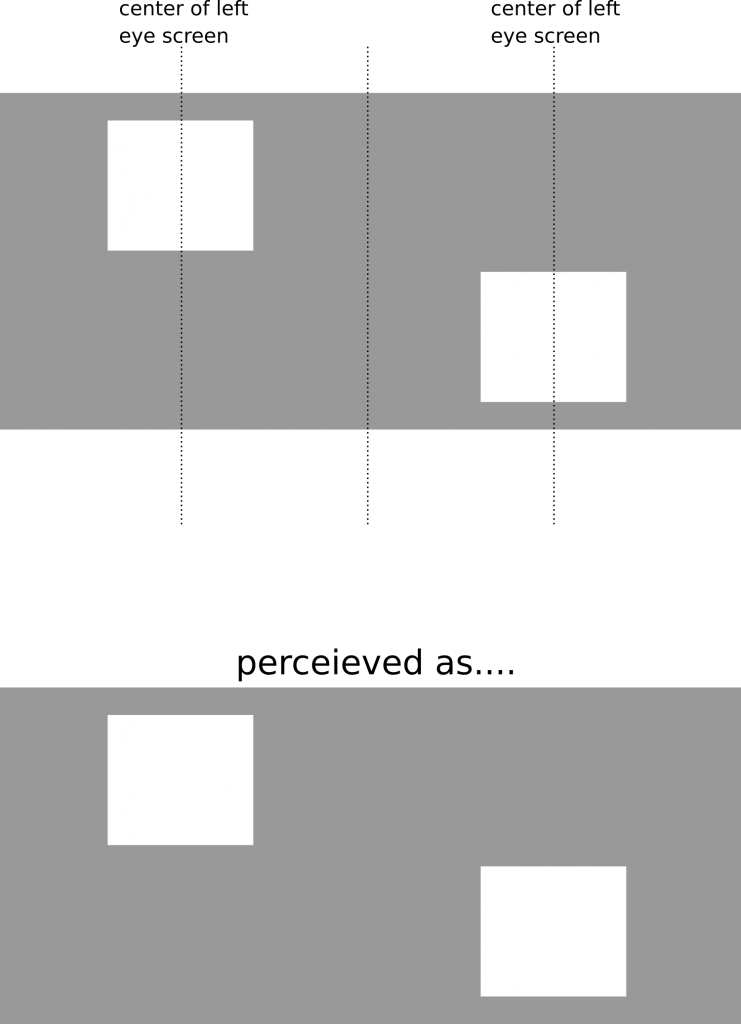

The view that this was, indeed, really happening, was reinforced by an unexpected oddity in the binocular suppression test in the app. The binocular suppression test presents two non-overlapping (distributed in the vertical axis) tiled grids. Each eye sees only one of these grids. Initially these grids start off in a neutral state, each with an equal image intensity as below.

Because each eye sees each side independently, they should appear more or less (depending on the angle of the lazy eye) above each other.

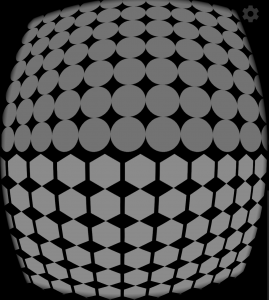

The idea is that the user can look upward, or downward, and the relative intensity of the image delivered to each eye changes, as below :

The goal behind this is to find the point at which the suppression of the lazy eye can be overcome by a much stronger signal relative to the good eye, such that the signal arriving at the brain is of equal strength (albeit dim) forcing the user to see both images (probably seeing double) so we can start to work on fusion. This is not what happened at all!
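As a rough illustration of the mechanic described above, the mapping from where the user looks to the intensity shown to each eye could be sketched as follows. This is not the app's code: the function names, the 30 degree range, the linear ramp, and the choice of which direction dims which eye are all assumptions made for the sake of the example.

```python
def eye_intensities(pitch_deg, max_pitch_deg=30.0):
    """Return (good_eye, lazy_eye) image intensities, each between 0 and 1.

    Hypothetical mapping: looking straight ahead (pitch 0) is the neutral
    state with both grids at full intensity. Looking upward dims the image
    shown to the good eye; looking downward dims the image shown to the
    lazy eye.
    """
    # Clamp the pitch so extreme head movements cannot push values out of range.
    pitch = max(-max_pitch_deg, min(max_pitch_deg, pitch_deg))
    t = pitch / max_pitch_deg  # -1 (fully down) .. +1 (fully up)
    good_eye = 1.0 - max(0.0, t)
    lazy_eye = 1.0 - max(0.0, -t)
    return good_eye, lazy_eye


def luminance_ratio(good_eye, lazy_eye):
    """Lazy-eye intensity divided by good-eye intensity (above 1 favours the lazy eye)."""
    if good_eye == 0.0:
        return float("inf")
    return lazy_eye / good_eye
```

In this sketch the "suppression breaking" ratio would simply be the value of `luminance_ratio` at the moment the user first reports seeing both grids.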

In the case of Mr C several incredibly interesting things happened. He reported that he could see both sides at the same time, and we could observe that both grids were at an identical image intensity. We later realised this is also because, as far as each eye was concerned, they were not overlapping. The brain was allowing both image signals to be processed simultaneously because it was not detecting conflict. The neuroscience tells us about cells specialised for the detection of interocular conflict, and has experimental evidence to suggest these are responsible for suppression. It could well be that this is what we are seeing.

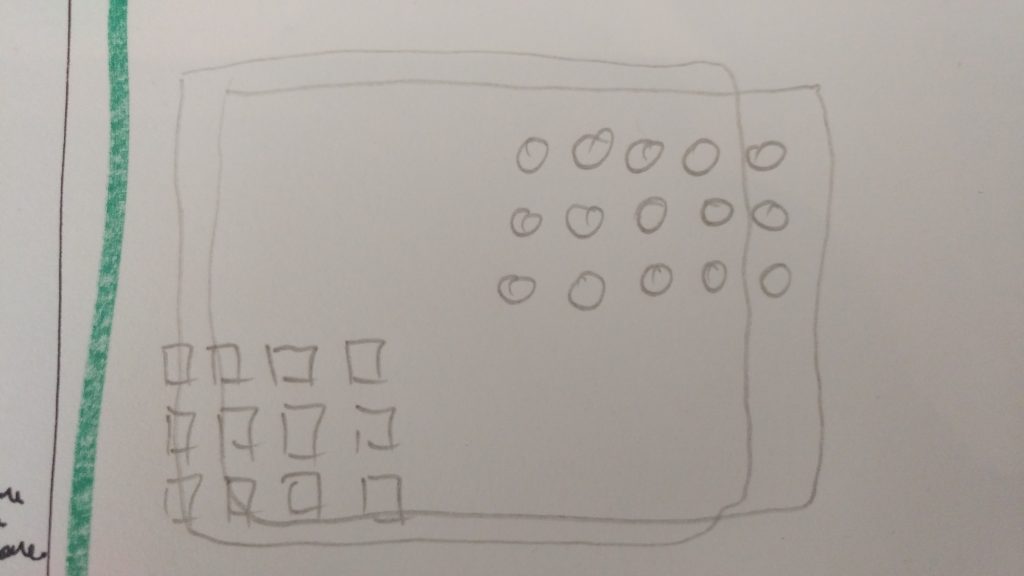

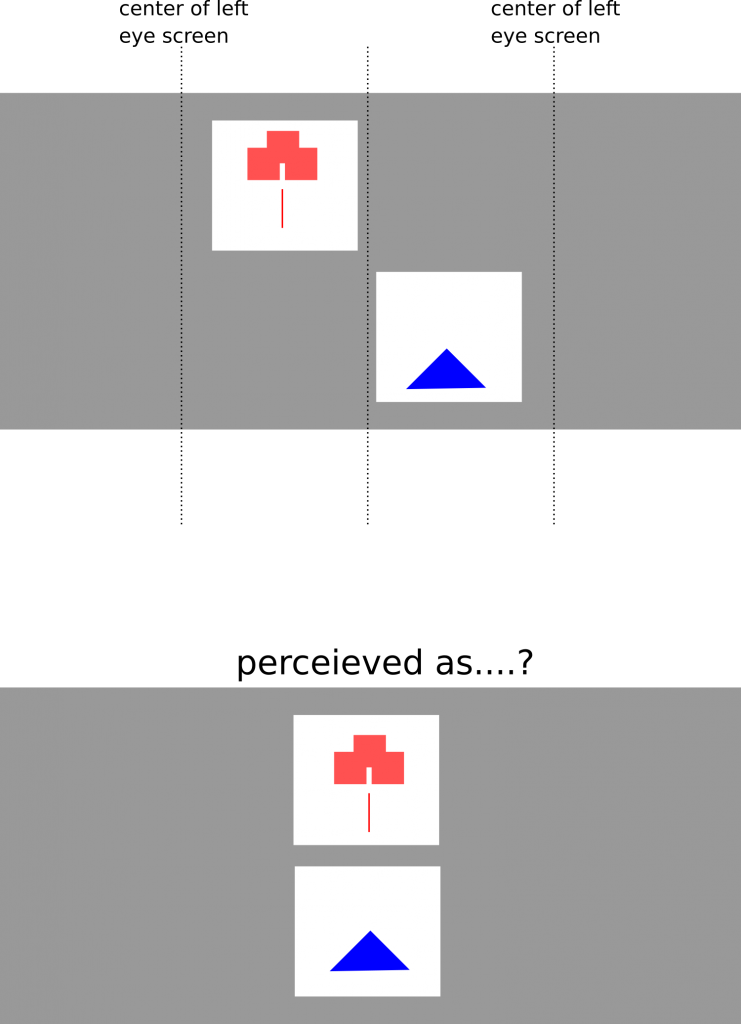

Mr C then drew what he was seeing. Initially I (personally) simply didn’t want to accept that what he drew could possibly be true – despite his insistence, but in retrospect, it does make sense. This is what he drew, to show what he was seeing:

It does seem to suggest his brain may be reordering his visual input to create something very similar to the “panoramic sight” reported by some people with exotropia. It is unlikely to be simple double-vision as the distance between the two grids is far larger than his actual maximal angle of misalignment (which is around 14 degrees). We will be thinking long and hard about how to explore this, and how it could potentially be a gateway to helping train fusion.

Strabism detection (app)

Once again, we gained unexpected insights into Mr C’s visual and mental flexibility.

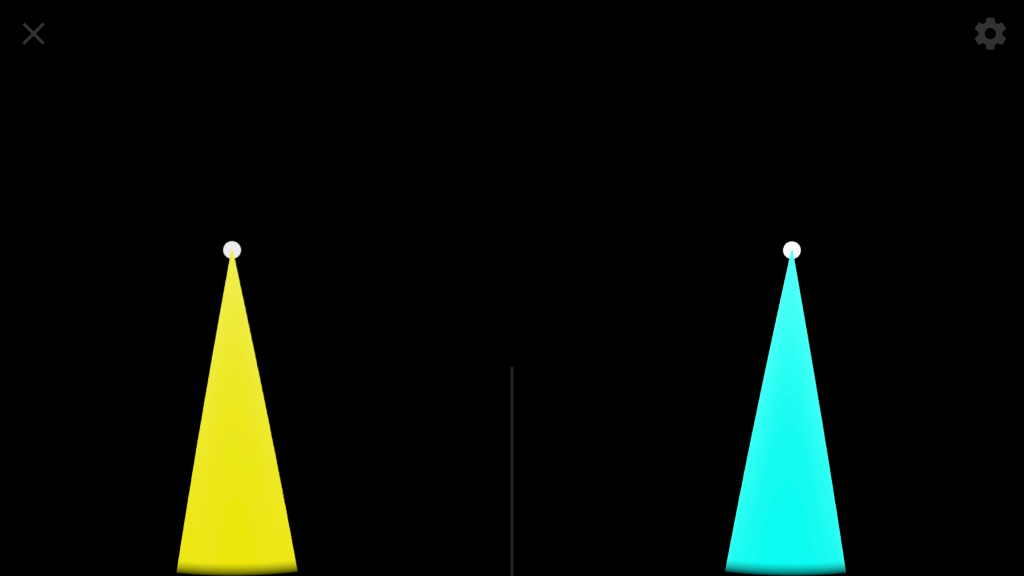

The strabism detection scene in the app could not be simpler. It is nothing more than a rod going off into space (it looks like an elongated cone) with a sphere towards the end (a circle on top of the cone). The left and right cones are shown in different colours (yellow and blue are chosen to avoid common problem colours for colour blind people). We position the virtual cameras with a PD (Pupillary Distance) of 0 so that both eyes receive exactly the same image. This scene is designed to inherit the “suppression breaking” luminance ratio detected in the Binocular Suppression calibration test previously mentioned, but in the case of Mr C there was no difference in ratio.
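To make the PD of 0 concrete, here is a minimal sketch of how two virtual cameras might be placed from a pupillary distance. The function names and the use of millimetres are assumptions for illustration only; the real scene may be set up quite differently.

```python
def camera_x_positions(pd_mm):
    """Return the horizontal positions (in mm) of the left and right cameras.

    The cameras sit symmetrically around the centre of the head, so a PD
    of 0 places both of them at the same point.
    """
    half = pd_mm / 2.0
    return -half, half


def eyes_receive_same_image(pd_mm):
    """True when both virtual cameras share one viewpoint (ignoring colour)."""
    left_x, right_x = camera_x_positions(pd_mm)
    return left_x == right_x
```

With `pd_mm=0` both cameras coincide, so any difference between what the two eyes report comes from the viewer rather than from the rendering.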

What we would expect the user to experience, were their eyes perfectly normal, is that the two cones overlap. This creates a ‘virtual’ colour unlike anything which can be physically represented, as the brain decides which eye has dominance in which portion of the image… it is as if the cone is shimmering (an effect of binocular rivalry, here’s a simple example experiment you can try at home).

What we would expect a strabismic person to experience, even with alternating strabismus, is that they see one colour or the other because one eye is suppressed. This is indeed what happened, but we then revisited this experiment in the light of what we had learnt from the binocular suppression experiment: that theoretically he might be able to fuse as long as there were no actual conflict in signal between the two eyes.

This time, Mr C exerted his will power and tried to use both eyes to see the cone. Initially he reported it switching colour (as the dominant eye alternated), then, suddenly... White! He repeated this a few times, and “white” became “a mixture of the two colours”. This is particularly interesting. Did Mr C just achieve fusion? It seems that way. Was that first fusion event producing a signal so strong (due to the overlapping signal of the two colours) that it was at first perceived as “white” (i.e. maximum brightness) but, with only a little time, more finely differentiated by the brain into the underlying colour components?

After we had finished with the tests, and were just chatting about what had happened (elements of which had surprised everybody involved, including Mr C), Mr C recalled that he had experienced a similar sensation to the one he had just experienced in the strabism detection scene, when somewhat intoxicated and very relaxed at a party in a forest the previous year. He could remember seeing things in a way that “felt different”, spending hours exploring it at the time. This is very anecdotal indeed, but similar to many other anecdotal experiences of strabismic people breaking suppression, fusing and suddenly perceiving depth. Depth is reported as a sense, a feeling. Sometimes those people are not aware that they are using both eyes, just that the act of seeing feels different.

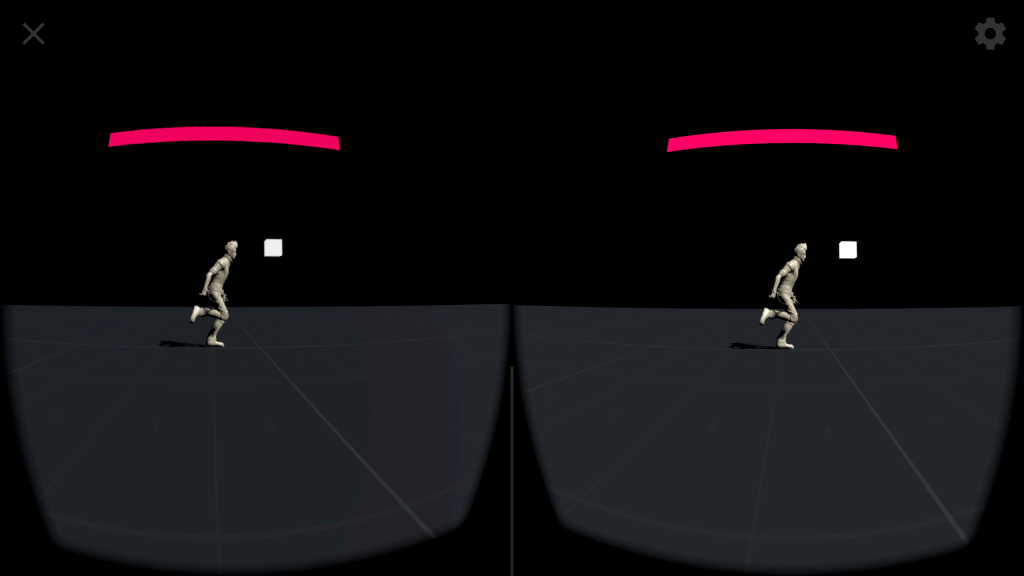

Endless Runner example game (App)

A short note on the endless runner. After some initial confusion, Mr C got the hang of the game dynamics (to jump over or duck under blocks which can damage the player, or to run through blocks which don’t). Blocks which are damaging are blocks only seen by one eye, and blocks which don’t damage you (but increase health) are seen by both eyes.
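The block rule described above can be sketched in a few lines. The class, the field names and the damage and bonus values are hypothetical and chosen only to illustrate the rule; they are not taken from the game itself.

```python
from dataclasses import dataclass


@dataclass
class Block:
    in_left_eye: bool   # is the block rendered for the left eye?
    in_right_eye: bool  # is the block rendered for the right eye?

    @property
    def is_damaging(self):
        # A block shown to exactly one eye is one the player must avoid.
        return self.in_left_eye != self.in_right_eye


def run_through(health, block, damage=10, bonus=5):
    """Return the player's health after running through a block."""
    if not (block.in_left_eye or block.in_right_eye):
        return health  # not rendered for either eye, so there is nothing to hit
    if block.is_damaging:
        return max(0, health - damage)
    return health + bonus  # seen by both eyes: safe, and it increases health
```

For example, `run_through(50, Block(True, False))` costs health, while `run_through(50, Block(True, True))` adds to it.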

Mr C did quite well, but admitted afterwards that he cheated by blinking each eye open/shut to determine which blocks were in one eye, and which in both. He managed this until the automatic speed-up in the game made it impractical. This is exactly what we expected players would do, and exactly why we built in a speed up, but Mr C really brought it home to us. As a strabismic person, he has always been making sure he doesn’t show any disadvantage, and intelligently “tricking” his way through social situations which might otherwise penalise him for his lack of depth perception.

One last idea, and one last surprise

By this point it seemed quite obvious that Mr C has the potential to fuse both eyes. The underlying problem may simply be that, so long as one eye is always misaligned, his brain will not have the chance to realise that it has the choice to straighten both eyes and use them simultaneously. He has learnt this coping mechanism, and used it all his life. How might it be possible to produce experiences which guide his brain towards the insight that it need not fear activating and combining the input from both eyes at the same time? I will write a few words in the section below about how we might achieve this guidance in the app, but at the time, I remembered what happened during the motility tests.

During the motility tests Mr C had a moment where his eyes started to shake because his brain couldn’t decide which eye should fixate (I say “his brain” because it isn’t a conscious decision in that moment). Remembering this, I wondered, might it be possible for him to alternate between eyes so quickly that neither had the time to fall aside? This would lead to both eyes looking straight ahead simultaneously – might that offer a window of opportunity for the brain to have a break through and begin fusion?

We filmed Mr C as he attempted to fixate on a point and alternate as quickly as possible. His alternating became faster and faster to the point that it really was a “shaking” and his eyes (although juddering) were both straight. I believe we could also see how deep-rooted his mental resistance/habituation is to this, by observing his autonomic response. His skin blushed, the hairs on his arms were standing on end, and he looked as though he would vomit. It was a huge mental effort.

We were using the Brock string to conduct this little experiment and I suddenly had the idea to move the bead he was fixating on towards his face. Both eyes followed the bead; it looked like vergence. Is there a way to exploit the alternation of the eye to open up the mind to the possibility of exploring fusion, but in a less stressful manner? Could something like this be a part of the game design within the app?

Insights, Ideas and Conclusions

Alternating strabismus

Perhaps there is more we can do with alternating strabismics than we guessed. We assumed that it would be a very difficult type of strabism to deal with, and it is certainly complex, but this first trial indicates it might be a situation in which the player has many of the building blocks already in place and ready for fusion (as both eyes are being used constantly, in one form or another [partially to provide extra environmental information, or as the fixating eye]).

Managing feedback

One key understanding is that the calibration scenes (such as the Binocular Suppression scene) can be perceived in more ways than we initially expected, and that these perceptions can reveal more about the type of amblyopia/strabismus the person has than we first realised.

Probably the best way to deal with this is to spend more time offering selectable choices in the manner of a classic “Expert System” to understand what the user’s perception really was. For example, in the binocular suppression scene, perhaps we should start by simply showing the neutral state without any user interaction. We then ask first what they see… Is one brighter than the other? We might then pick an eye and allow them to choose from a range of possible perceptions they may have had – e.g. did the grids appear vertically aligned, non-aligned (as in Mr C’s drawing), overlapping etc.
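A minimal sketch of such a question flow is shown below. The questions, the answer options and the function name are illustrative assumptions only; a real version would need far more branches, and nothing here is meant as a diagnostic tool.

```python
# Each node has a prompt and a set of selectable answers; every answer
# names the next node to visit. "done" ends the questionnaire.
QUESTIONS = {
    "count": {
        "prompt": "How many grids do you see?",
        "choices": {"one": "done", "two": "brightness"},
    },
    "brightness": {
        "prompt": "Is one grid brighter than the other?",
        "choices": {
            "same": "layout",
            "upper brighter": "layout",
            "lower brighter": "layout",
        },
    },
    "layout": {
        "prompt": "How are the two grids arranged?",
        "choices": {
            "vertically aligned": "done",
            "offset sideways": "done",
            "overlapping": "done",
        },
    },
}


def run_questionnaire(answers):
    """Walk the question tree with a sequence of answers and record each one."""
    node = "count"
    record = {}
    remaining = iter(answers)
    while node != "done":
        question = QUESTIONS[node]
        answer = next(remaining)
        if answer not in question["choices"]:
            raise ValueError(f"{answer!r} is not an option for {node!r}")
        record[node] = answer
        node = question["choices"][answer]
    return record
```

A session resembling Mr C’s might be recorded as `run_questionnaire(["two", "same", "offset sideways"])`, which returns one entry per question asked.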

Game philosophy

Compensating for disadvantages is an entirely normal human behaviour. It is, however, a pattern which may run contrary to the person’s own best interests in a training context. For this reason we have learnt that game environments should, at least initially, be entirely non-confrontational. There should be no “right” way to do anything, but the opportunity to sensitise and explore one’s vision, until one is ready on one’s own terms, to “put it to the test” in a game environment. Once in a game environment, however, a competitive angle may well be the tool to bring the person’s will power into play, to win by solving the puzzle, surviving, or overcoming…

The power of the community

It is almost frightening how important real feedback from real sufferers is… and the more feedback there is, the more powerful it can become… however, it is difficult enough to process, and digest, individual cases let alone the torrent of experience generated by a community. For this reason, it must be a central priority of EyeSkills to make the analysis of community experience manageable. Our current approach is to apply A/B testing mechanisms, but at this point, I can only say that we will need to spend more time worrying about it because the potential increase in the quality of the experience we can offer is so rewarding!

How we might improve the endless runner

This first test has generated a wealth of ideas, and these ideas are testable. Here are some of them:

Underlying space mechanics

With Mr C we seem to have seen a phenomenon where non-overlapping content being presented to each eye not only failed to trigger suppression, but was also contained within a “panoramic” field of view.

This immediately raises two interesting questions.

In the absence of a panoramic view (where the central position shown to each eye is also vertically aligned) wouldn’t this lend itself very well indeed to a vertical split screen?

One example scenario : I could imagine a space invader variant where the ships coming down from above burn up in the atmosphere (i.e. do not penetrate into the lower screen space and thus avoid overlap) whilst their instantaneous shots are presaged by a glowing power up (we do not want projectiles violating the divide either – they should be something like laser strikes).

In this way, the player might avoid suppressing either eye, we can apply eye misalignment to vertically align the top and bottom spaces (from calibration) and the brain can get to work learning how to fuse the input from both eyes while not suppressed.

What happens if that virtual space is offset (due to a panorama perception as in the case of Mr C) such that there is no vertical alignment?

If we shift the virtual center of the scene perceived in each eye inwards, do we then achieve vertical alignment in the panoramic setting?
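One way to frame this experiment is to split the sideways offset the user reports evenly between the two eyes. The sketch below assumes that such an offset can be measured in degrees during calibration, and that an even split is sensible; both are assumptions, and the function names are hypothetical.

```python
def inward_shifts(perceived_offset_deg):
    """Split a perceived sideways offset between the two grids across both eyes.

    Returns (left_shift_deg, right_shift_deg). Positive values move an image
    towards the user's right, so the left-eye image moves right and the
    right-eye image moves left, i.e. both move inwards towards the nose.
    """
    half = perceived_offset_deg / 2.0
    return half, -half


def residual_offset(perceived_offset_deg, left_shift_deg, right_shift_deg):
    """Sideways offset that would remain if perception followed the shifts exactly."""
    return perceived_offset_deg - (left_shift_deg - right_shift_deg)
```

Whether perception really does follow the shifts is exactly the open question; the sketch only describes the geometry we would apply.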

This would be a similar case to the strabismic detection, in which Mr C achieved fusion as suppression was NOT activated by his brain because (perhaps) there was no conflict in either eye despite two objects occupying the same “virtual space” in the mind. Perhaps the stress he experienced was more due to the fact that he was mentally having to shift the two images towards fusion due to his panoramic view – a situation we can overcome by shifting the viewing areas for him.

In terms of the EyeSkills framework, the key learning is that we may also need to provide a facility to detect the need to reposition viewing spaces (and to actually reposition them) with an offset that can support “panoramic” style viewing.

Playful opportunities to learn sensing

Getting the player involved in their own therapy is the key… after all, only they can help themselves learn how to see… it’s their own brain doing the work. This means that the collection of experiences built around the framework need to focus on a journey of understanding and discovery… gradually uncovering experientially how the vision system is pieced together, allowing the person to work with each step incrementally… understanding where they are in the grand scheme of things and what they can do about it.

The current iteration of the Endless Runner sample game (which is really there to initially help us develop the framework to help other people develop games) places far too much pressure on the player far too soon. It is necessary to first get a feeling for how an object feels when it is only shown in one eye vs. in both. This really is something one can learn quickly.

To achieve this, the player should initially just be able to explore… perhaps to find some missing objects. As they wander around, they learn by experience that some boxes hurt and some don’t… we don’t even explain why… we let the user figure it out. Let them blink, let them “cheat” – it’s no longer cheating, it’s figuring out that there’s a difference between an object being in one or both eyes. Eventually, blinking will get boring, and the user is likely to develop an affinity simply due to the way neural networks learn.

Personally, I believe it would be a good thing to also allow the player to climb on, and jump from, and onto blocks which are seen by both eyes, whilst falling through blocks which aren’t (see below).

Only when a user is ready for a challenge, ready for a situation in which they are going to have to exert some will power, only then should they have the *choice* to enter that challenging situation.

Making more of the non-overlap

We believe we might now have seen that we can avoid suppression being triggered, in some cases, by preventing the brain’s conflict detection mechanisms from being triggered… so let’s make more use of that.

Why not, again, completely separate the scene into separate halves? Even as an endless runner, the course could be horizontally centred in the screen, requiring the user to control the player left and right to avoid falling off the path.

The player has to simultaneously respond to objects above and below the path, which offer danger or benefit, but which are seen entirely in the opposite eye. The player must then coordinate their vision to see which dangers or collectables can be reached (or are a threat) given where they are on the path, and whether the path will allow them to collect / avoid them.

Avoiding cheating (blinking) by temporal/ephemeral effects

Imagine that, in the climbing-block elements of the game, the blocks randomly fade into and out of existence in both eyes (i.e. fading into existence in only one eye or the other at a time). In this situation we are still working explicitly with overlap. This will be exercising the suppression mechanism in the brain quite intensely on one level – and what will happen there? Can we transform the feeling of suppression into an indicator that fusion is possible for that person?
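One possible reading of this idea is sketched below: on each fade cycle a block appears in only one randomly chosen eye, with its opacity ramping up and back down. The triangle-wave fade, the four second period, the seeding scheme and the function names are all assumptions for illustration.

```python
import random


def block_alpha(t, period=4.0):
    """Triangle-wave opacity: 0 at the start of a cycle, 1 halfway, 0 at the end."""
    phase = (t % period) / period
    return 1.0 - abs(2.0 * phase - 1.0)


def eye_alphas(t, seed, period=4.0):
    """Return (left_alpha, right_alpha) for one block at time t (in seconds).

    The eye is re-chosen at random for every fade cycle, but stays fixed
    within a cycle because the generator is seeded from the cycle number.
    """
    cycle = int(t // period)
    eye = random.Random(seed + cycle).choice(["left", "right"])
    alpha = block_alpha(t, period)
    return (alpha, 0.0) if eye == "left" else (0.0, alpha)
```

Because the block is only briefly visible, and in an unpredictable eye, closing one eye to check should quickly stop being a practical strategy.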

Conclusion

Exciting and interesting times ahead!