The energy, the passion, the determination of this team never cease to amaze me. More amazing progress.

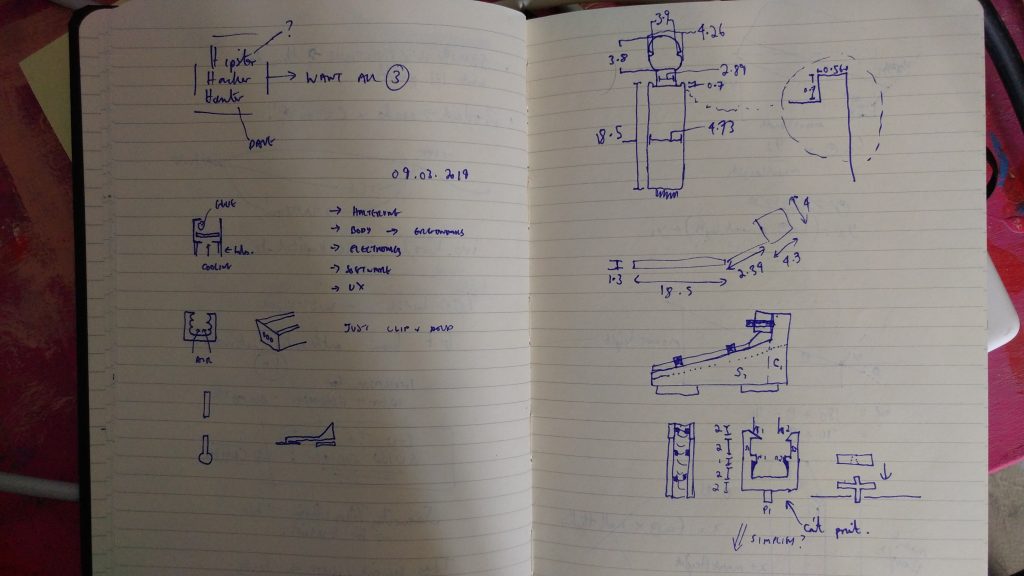

Let’s start talking about hardware:

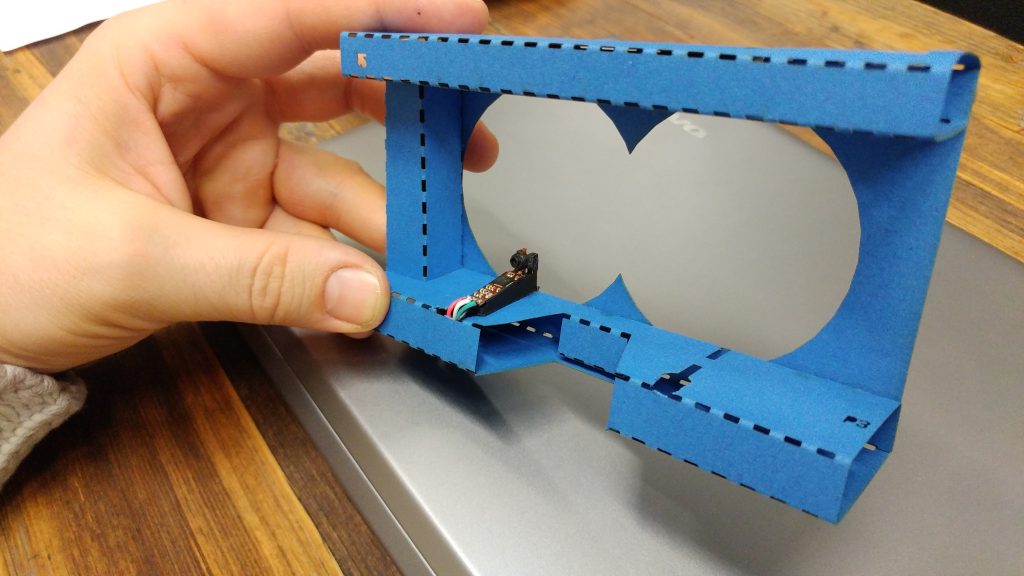

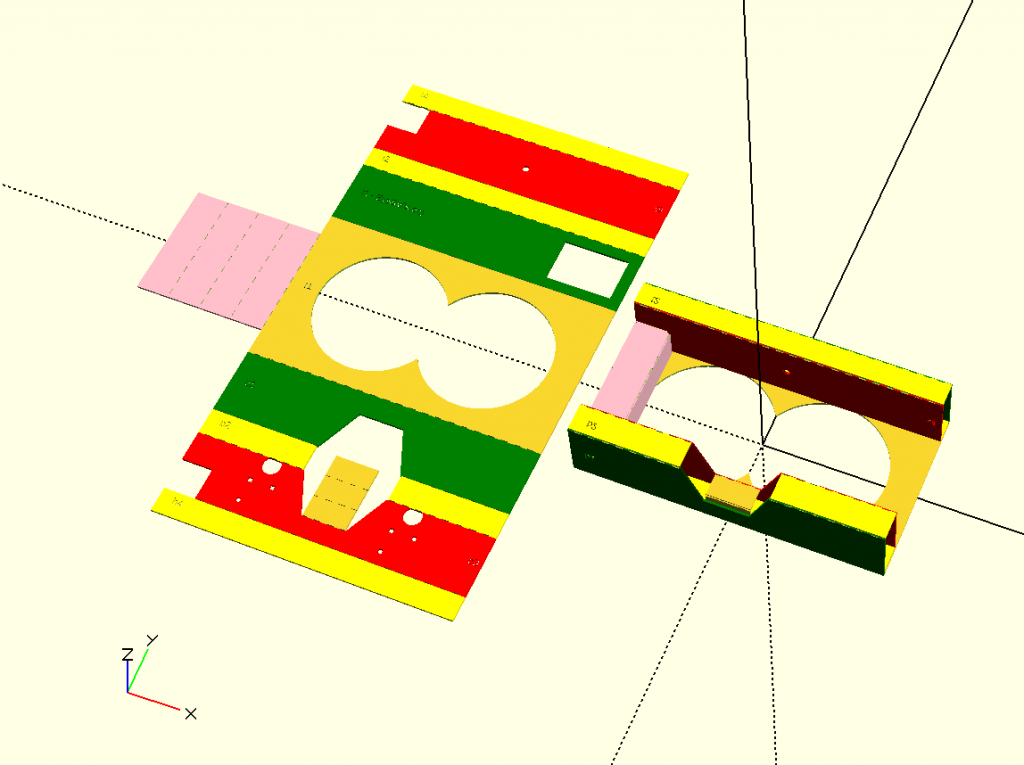

My focus was on continuing the work to produce a new central component for Google Cardboard (v2) capable of supporting eye tracking.

This is an intermediate design – laser cut from card, with one of the first 3D printed custom camera holders mounted and containing the internals of an endoscopic camera.

Laser cutters are a *lot* of fun!

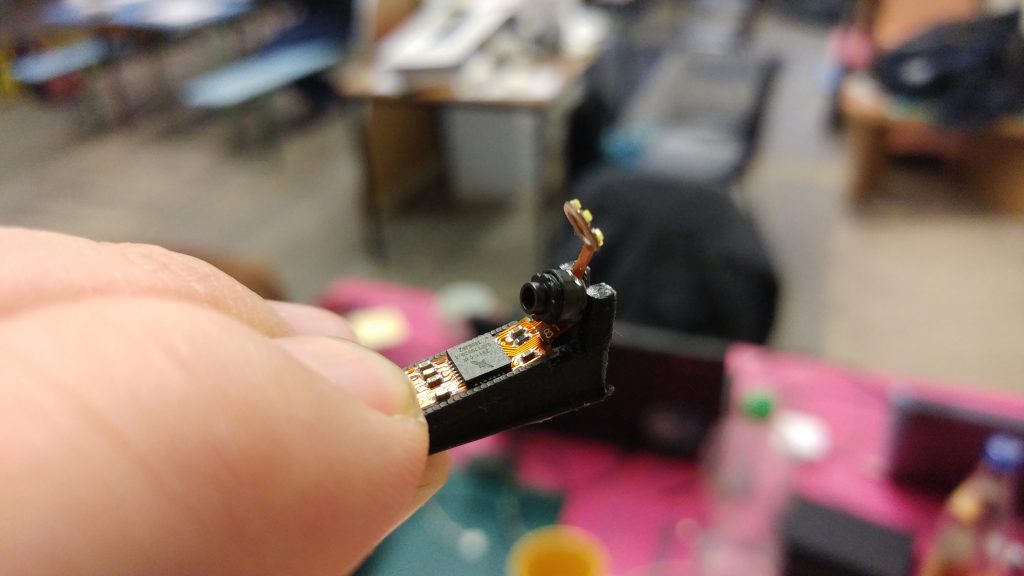

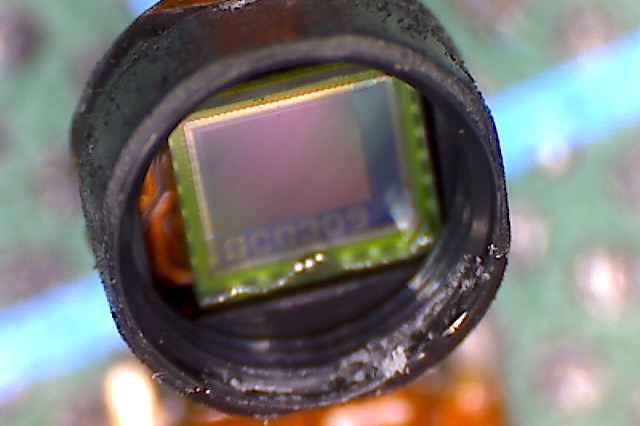

Here’s a closer look at the holder:

A later iteration has mounting slots and a redesign of the cardboard so that (with the help of a 3D printed tool) the mounts are always installed in the right direction and in the right location. The channel/slot running lengthways up the middle is there to allow heat from the metal backplate of the camera and PCB to escape.

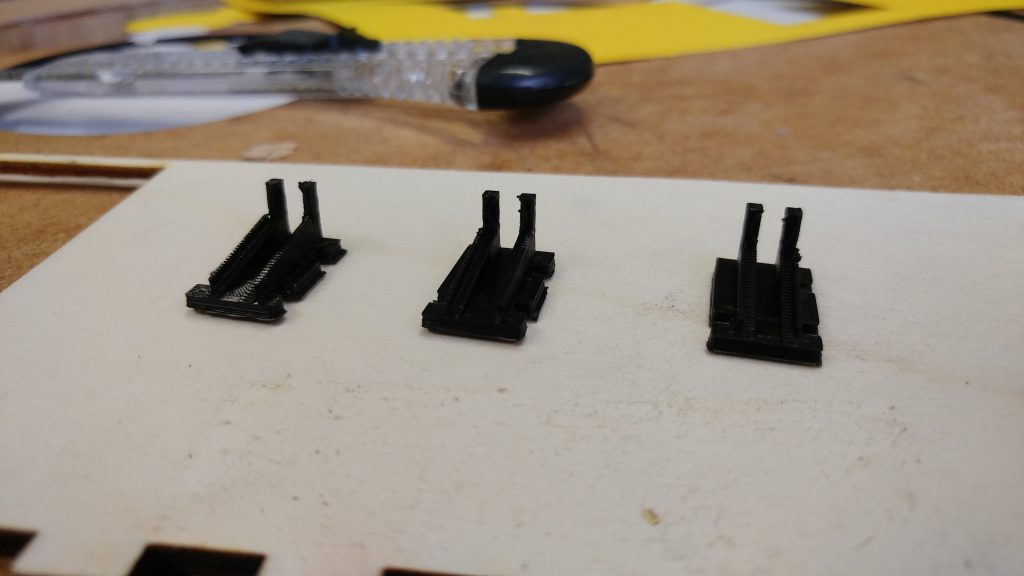

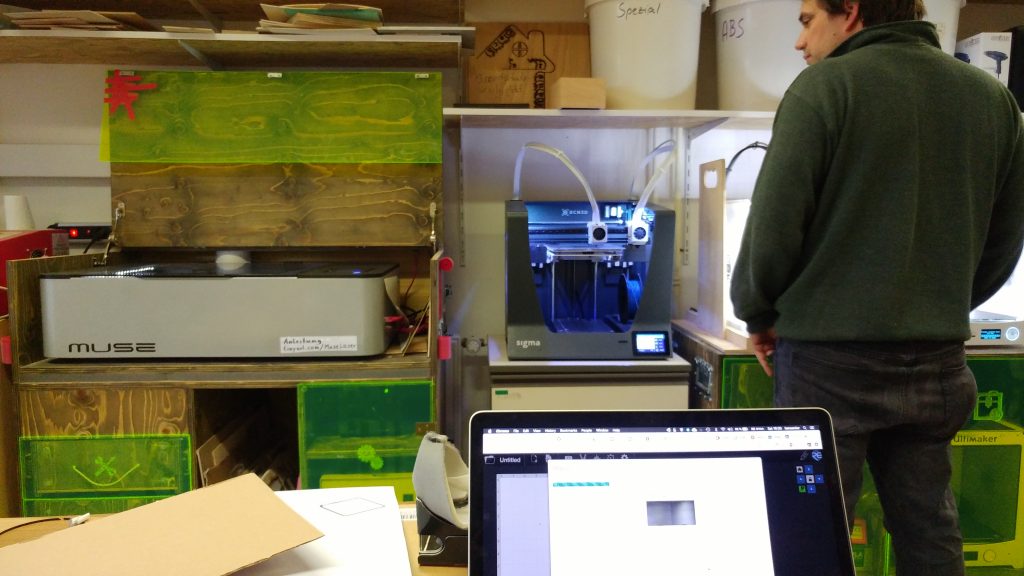

Designing these parts in OpenSCAD (they are fully parameterised so we can cope with different camera parts or headset designs) was a bit of a headscratcher at times. It took several iterations and alternative designs until I was happy.

…but I’m actually really pleased with the OpenSCAD description. I built it so that it both shows the flattened cut and applies all the correct transformations to show what it would look like folded – very helpful when seeing what works best!
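For anyone curious about the flat/folded trick, here is a minimal sketch of the idea in Python. It is not the actual OpenSCAD source: the function name, the flap dimensions and the hinge position are all made up for illustration. The point is simply that one parameter (the fold angle) can drive both views: at 0 degrees you get the flat cut pattern, at 90 degrees you get the panel as it would sit once folded.

```python
import math

def fold_panel(points, hinge_x, angle_deg):
    """Rotate flat panel corners (x, y) about the fold line x = hinge_x.

    The panel starts in the z = 0 plane. The fold line runs parallel to
    the y axis, so y is unchanged and only x and z move.
    Returns a list of (x, y, z) tuples.
    """
    a = math.radians(angle_deg)
    folded = []
    for x, y in points:
        d = x - hinge_x  # signed distance from the fold line
        folded.append((hinge_x + d * math.cos(a), y, d * math.sin(a)))
    return folded

# Hypothetical side flap: 30 mm wide, 80 mm tall, hinged along x = 100.
FLAP = [(100, 0), (130, 0), (130, 80), (100, 80)]

flat = fold_panel(FLAP, hinge_x=100, angle_deg=0)     # the laser-cut outline
folded = fold_panel(FLAP, hinge_x=100, angle_deg=90)  # the assembled view
```

In OpenSCAD the same thing would typically be done with `translate` and `rotate` around each fold line, with the angle exposed as a parameter.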

At the same time, Moritz was busy working out how to remove the IR filters from the camera. He ended up making a custom tool to unscrew the camera lens assembly, and had to break out the IR filters using brute force:

He also custom shortened and re-soldered the camera cables so we could mock up the prototype, and added an IR light source to replace the LED rings on the original cameras – resulting in our first IR shot of an eye!

Of course, we only managed to get that result because of the hard work put in by Johan, Andre and Rene (left to right) on interfacing Android with those cameras. The two main sticking points left are how to address both cameras simultaneously and how to integrate OpenCV with Unity.

At the same time, what use is all this when a Google Cardboard is so uncomfortable and poorly fitting, letting in light and distracting from what needs to be a concentrated experience?

Asieh’s incredibly intuitive and empathic design sense, coupled with Cong’s ability to synthesise many (often divergent) requirements, resulted in what I think is a truly inspiring solution:

This is an add-on to a GCV2 which is amazingly comfortable, fits all heads, and blocks all light!

I also liked this “Star Wars” style prototype:

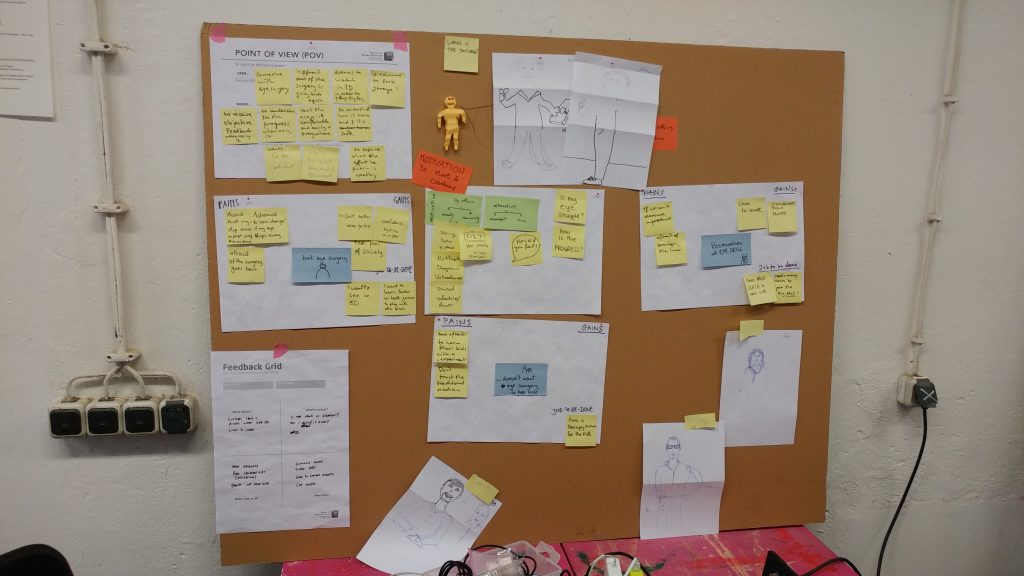

So, with hardware and ergonomics covered, we come to the other essential component which makes for a good experience… the User Experience! Flo (sadly, no picture?!?!) has an inspirational mind in a super relaxed soul.

After many boards full of analysis:

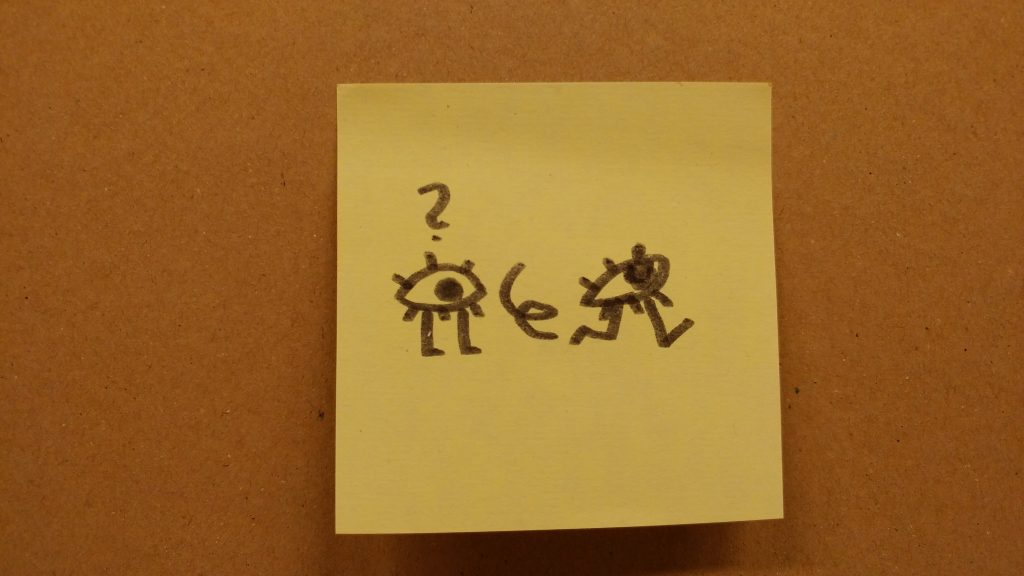

he drew this little cartoon “Eh?!? Where are you buggering off to?!?” which connected with old ideas I’d had from a storytelling perspective many years ago. Together this is going to form the foundation for how we tell the EyeSkills story and build the experience!

It’s been fourteen days straight with a level of intensity that is barely sustainable, but it’s been worth it. I’m looking forward to the upcoming third and final event, and hope that we can all get our tasks finished in time this week!